Best Practices for Documenting and Managing Employee Knowledge in HR

Devin Partida

best-practices-for-documenting-and-managing-employee-knowledge-in-hr

April 16, 2025

The Biggest Challenge of Knowledge Management (KM)

Dr. Mustafa Hafizoglu; Length: ~400 words, 2 min read.

the-biggest-challenge-of-knowledge-management-km

April 15, 2025

How Data Governance Enhances the Quality of Organizational Knowledge

Devin Partida

how-data-governance-enhances-the-quality-of-organizational-knowledge

April 11, 2025

Why Change Management Needs Knowledge Management: A Strategic Partnership for Sustainable Transformation

Ekta Sachania

why-change-management-needs-knowledge-management-a-strategic-partnership-for-sustainable-transformation

April 7, 2025

Unlocking the Power of Knowledge Graphs for AI Pre-Sales Success

Ekta Sachania

unlocking-the-power-of-knowledge-graphs-for-ai-pre-sales-success

April 1, 2025

The Impact of AI on Data Security Within Knowledge Management Systems

Guest Blogger Devin Partida

the-impact-of-ai-on-data-security-within-knowledge-management-systems

March 26, 2025

Mapping Knowledge, Bridging Gaps: A Step-by-Step Guide to Building a Knowledge Graph

mapping-knowledge-bridging-gaps-a-step-by-step-guide-to-building-a-knowledge-graph

March 23, 2025

From Chaos to Clarity: How Knowledge Management Powers Winning Proposals in Presales

from-chaos-to-clarity-how-knowledge-management-powers-winning-proposals-in-presales

March 12, 2025

Integrating Text Analysis Tools to Streamline Document Management Processes

Devin Partida

integrating-text-analysis-tools-to-streamline-document-management-processes

March 11, 2025

Optimizing Hardware Setups for Effective Knowledge Management Systems

Devin Partida

optimizing-hardware-setups-for-effective-knowledge-management-systems

March 10, 2025

Knowledge Management in the Age of AI: Challenges and Opportunities

Harikrishna Kundariya

knowledge-management-in-the-age-of-ai-challenges-and-opportunities

March 3, 2025

Escaping the Definition Trap: Leveraging Knowledge for Clarity and Success

Dr. Mustafa Hafizoglu

escaping-the-definition-trap-leveraging-knowledge-for-clarity-and-success

March 3, 2025

From Data to Wisdom: Using AI to Strengthen Knowledge Management Strategies

Guest Blogger Amanda Winstead

from-data-to-wisdom-using-ai-to-strengthen-knowledge-management-strategies

February 13, 2025

Why Your Knowledge Management Strategy Needs an Upgrade: Key Signs and Solutions

KMI Guest Blogger Ekta Sachania

why-your-knowledge-management-strategy-needs-an-upgrade-key-signs-and-solutions-2

February 12, 2025

Enhancing Knowledge Management with Data Visibility

enhancing-knowledge-management-with-data-visibility

January 30, 2025

Why Your Knowledge Management Strategy Needs an Upgrade: Key Signs and Solutions

KMI Guest Blogger Ekta Sachania

why-your-knowledge-management-strategy-needs-an-upgrade-key-signs-and-solutions

January 24, 2025

Bridging the Gap: How Knowledge Managers Embody the Spirit of Trailblazers

In today’s fast-evolving and highly competitive global business landscape, organizations need leaders who can navigate change, drive innovation, and foster growth. Trailblazers are those rare individuals who create transformative pathways for others to follow. While the concept of a trailblazer often conjures up images of visionaries or entrepreneurs, it also aligns closely with the role of a Knowledge Manager. A Knowledge Manager (KM) is not just a custodian of information but a strategic driver of change, efficiency, and innovation.

KMI Top Blogger Ekta Sachania

bridging-the-gap-how-knowledge-managers-embody-the-spirit-of-trailblazers

January 13, 2025

How to Approach Knowledge Mapping for Upskilling Your Team

In today’s fast-evolving business landscape, upskilling teams is not just a goal; it’s a necessity. To align with organizational visions and navigate complex deployments or emerging challenges, it’s essential to understand your team’s current knowledge base, identify gaps, and create actionable strategies for growth. This is where knowledge mapping becomes an invaluable tool. Here’s a practical guide to get you started.

how-to-approach-knowledge-mapping-for-upskilling-your-team

December 5, 2024

Leveraging Knowledge Management for Organizational Development

Organizations constantly need to adapt, innovate, and improve performance in the ever-evolving business landscape to stay competitive. At the core of this evolution lies Organizational Development (OD), a strategic approach to improving a company’s processes, culture, and adaptability. But how can organizations ensure these improvements are sustainable and impactful? The answer lies in integrating a robust Knowledge Management (KM) practice into the OD framework.

leveraging-knowledge-management-for-organizational-development

December 2, 2024

The Role of KM in Enhancing CX and DT Services

Organizations offering CX and DT services often deal with diverse teams across different regions or domains, each bringing its own processes, tools, and approaches. KM acts as the bridge, ensuring that knowledge, whether explicit or tacit, flows seamlessly across teams to improve overall service delivery. Here’s how introducing a knowledge management practice to support CX and digital transformation services can impact service delivery...

Ekta Sachania

the-role-of-km-in-enhancing-cx-and-dt-services

November 19, 2024

Virtual Team Members - The Pulse of Distance Work

The heart is a fascinating organ. It sends a pulse throughout the body, and you want that pulse because it means you are alive: the heart does the body's work! The same applies to your business. Virtual team members (VTMs) are the people who do the work behind your business and product success.

Dr. Cassandra Smith | Working at a Distance

virtual-team-members-the-pulse-of-distance-work-45e5d

September 2, 2015

What is a Learning Circle?

You may have experienced one-on-one coaching, maybe executive coaching, leadership, career, or life coaching. All of these are ways in which a professional coach helps an individual discover new insights, make coherent choices, and integrate new behaviors. It can be a very powerful way to make transformative lasting change in your life.

Jane Maliszewski, Coach

what-is-a-learning-circle

May 14, 2015

Digital Transformation & Productivity - Part II

Rebecka Isaksson | Director, KM Programs - Microsoft

digital-transformation-productivity-part-ii-2

April 20, 2016

The First 100 Days: How Good Was it For You?

Rooven Pakkiri - Consultant and Author, Decision Sourcing

the-first-100-days-how-good-was-it-for-you

February 5, 2015

New Year's Resolutions - Knowledge Management Edition

Zach Wahl | Enterprise Knowledge

new-year-s-resolutions-knowledge-management-edition

January 6, 2015

Being Social – Knowledge Management and Social Media

Dr. Anthony J. Rhem

being-social-knowledge-management-and-social-media

June 2, 2015

Sharing Hidden Knowledge - The Knowledge Jam Technique

sharing-hidden-knowledge-the-knowledge-jam-technique

February 22, 2015

Change and Knowledge in a Changing World

Anne Marie McEwan, CEO of The Smart Work Company, Ltd.

change-and-knowledge-in-a-changing-world

March 23, 2015

Tactics to Manage Fluid Knowledge

tactics-to-manage-fluid-knowledge

February 22, 2015

Creating an Environment for Housing KM

Rustin Diehl, JD, CKM

creating-an-environment-for-housing-km

April 1, 2015

I Went on Vacation, My Luggage Did Not

Jane Maliszewski

i-went-on-vacation-my-luggage-did-not

June 10, 2015

Change Management for Agile Projects

Katy Saulpaugh | Enterprise Knowledge

change-management-for-agile-projects

August 12, 2015

Before Knowledge Management and Working Out Loud - The Practice of Civility

Howard Cohen, CKM

before-knowledge-management-and-working-out-loud-the-practice-of-civility

June 18, 2015

Putting the Knowledge in Knowledge Bases

Zach Wahl | CEO, Enterprise Knowledge

putting-the-knowledge-in-knowledge-bases

June 25, 2015

Workplace Evolution – Tuning in to Worker Expectation

Rooven Pakkiri | Social Business Consultant

workplace-evolution-tuning-in-to-worker-expectation

October 28, 2015

Justifying KM

Rustin Diehl, JD, CKM | Innovation Trainer and Expert

justifying-km

June 30, 2015

What is Meant by Knowledge Management?

José Carlos Tenorio Favero

what-is-meant-by-knowledge-management

August 6, 2015

Driving Process Innovation - Part One

Alastair Ross | Director, Codexx Associates LTD

driving-process-innovation-part-one

July 16, 2015

Driving process innovation - Part Two

Alastair Ross | Director, Codexx Associates LTD

driving-process-innovation-part-two

July 27, 2015

Knowledge Management and the Sharepoint Era

Jose Carlos Tenorio Favero | Global Knowledge Management

knowledge-management-and-the-sharepoint-era

September 17, 2015

Why do Knowledge Management (KM) Programs and Projects Fail?

Dr. Anthony J. Rhem

why-do-knowledge-management-km-programs-and-projects-fail

September 22, 2015

Virtual Team Members - The Pulse of Distance Work

Dr. Cassandra Smith | Working at a Distance

virtual-team-members-the-pulse-of-distance-work

September 2, 2015

How Will KM Certification Benefit My Career?

Marie Jeffrey

how-will-km-certification-benefit-my-career

April 6, 2016

To Social or Not to Social?

Rebecka Isaksson | Director, KM Programs - Microsoft

to-social-or-not-to-social

December 2, 2015

Collaboration is Fundamental in a Mobile-First, Cloud-First World

Rebecka Isaksson | Director, KM Programs - Microsoft

collaboration-is-fundamental-in-a-mobile-first-cloud-first-world

October 15, 2015

Human Capital - The Last Differentiator

Rooven Pakkiri | Social Business Consultant

human-capital-the-last-differentiator

September 30, 2015

Is KM a Science?

Lesley Crane, PhD

is-km-a-science

January 12, 2016

Do you want to be one of the 8% who achieves their New Year’s Resolution?

Jane Maliszewski, Founder - Vault Associates

do-you-want-to-be-one-of-the-8-who-achieves-their-new-year-s-resolution

January 6, 2016

Is KM a Science? (Part 2 of 2)

Lesley Crane, PhD

is-km-a-science-part-2-of-2

January 20, 2016

3 Steps to Developing a Practical Knowledge Management Strategy: Step #2 Define the Target State

Yanko Ivanov | Enterprise Knowledge

3-steps-to-developing-a-practical-knowledge-management-strategy-step-2-define-the-target-state

March 22, 2017

Is KM a Science? The Verdict

Lesley Crane, PhD

is-km-a-science-the-verdict

February 10, 2016

Learning from Dirt Bikes

Rustin Diehl, JD, CKM

learning-from-dirt-bikes

January 28, 2016

The Disruptive Future of Knowledge Management

Jose Carlos Tenorio Favero | @josecarloskm

the-disruptive-future-of-knowledge-management

August 22, 2016

Collaborative Knowledge Mapping

Manel Heredero | Managing Director, Werner Sobek - London

collaborative-knowledge-mapping

July 6, 2016

Digital Transformation & Productivity - Part II

Rebecka Isaksson | Director, KM Programs - Microsoft

digital-transformation-productivity-part-ii-1

May 18, 2016

Information Architecture and KM

Anthony J. Rhem

information-architecture-and-km

May 5, 2016

Why Is It So Hard to Find What I'm Looking For?

Rebecka Isaksson | Director, KM Programs - Microsoft

why-is-it-so-hard-to-find-what-i-m-looking-for

June 30, 2016

Foolish Knowledge: The Dunning-Kruger Effect

Rustin Diehl, JD, CKM

foolish-knowledge-the-dunning-kruger-effect

August 4, 2016

Design a User-Centric Taxonomy

Ben White | Enterprise Knowledge

design-a-user-centric-taxonomy

September 7, 2016

Controlling the Forgetting Curve with a Knowledge Management System

Sandra Lupanava | ScienceSoft

controlling-the-forgetting-curve-with-a-knowledge-management-system

February 22, 2017

The 4 Steps to Designing an Effective Taxonomy: Step #2 Make Sure Your Facets Are Consistent

Ben White | Enterprise Knowledge

the-4-steps-to-designing-an-effective-taxonomy-step-2-make-sure-your-facets-are-consistent

September 26, 2016

The 4 Steps to Designing an Effective Taxonomy: Step #3 Validate Your Taxonomy

Ben White | Enterprise Knowledge

the-4-steps-to-designing-an-effective-taxonomy-step-3-validate-your-taxonomy

October 13, 2016

Agile Taxonomy Maintenance

Angela Pitts | KM Expert - Enterprise Knowledge

agile-taxonomy-maintenance

November 8, 2016

Your KM Project Needs a Change Strategy

Katy Saulpaugh | Enterprise Knowledge

your-km-project-needs-a-change-strategy

December 6, 2016

Measure the Findability of Your Content

Ben White | Enterprise Knowledge

measure-the-findability-of-your-content

October 27, 2016

Knowledge Management in 2017

Zach Wahl | President and CEO, Enterprise Knowledge

knowledge-management-in-2017

January 25, 2017

How To Turn Employees Into Active Users Of Corporate Knowledge

Sandra Lupanava | ScienceSoft

how-to-turn-employees-into-active-users-of-corporate-knowledge

January 10, 2017

3 Steps to Developing a Practical Knowledge Management Strategy

Yanko Ivanov | Enterprise Knowledge

3-steps-to-developing-a-practical-knowledge-management-strategy

March 8, 2017

Knowledge Management of Structured and Unstructured Information

Joe Hilger | Enterprise Knowledge

knowledge-management-of-structured-and-unstructured-information

April 18, 2017

Video: KM and The Importance of Making Connections

Zach Wahl | President and CEO, Enterprise Knowledge

video-km-and-the-importance-of-making-connections

February 8, 2017

Are Internal Social Networks Ungovernable?

Craig St. Clair | Enterprise Knowledge

are-internal-social-networks-ungovernable

April 5, 2017

Agile Content Teams - Part 1: Ceremonies

Rebecca Wyatt | Enterprise Knowledge

agile-content-teams-part-1-ceremonies

May 17, 2017

Knowledge Change is not a Technology Project

Rebecka Isaksson | Director, KM Adoption and Strategy | Microsoft

knowledge-change-is-not-a-technology-project

June 7, 2017

Defeating High Employee Turnover with Knowledge Management Tools

Sandra Lupanava | ScienceSoft

defeating-high-employee-turnover-with-knowledge-management-tools

June 21, 2017

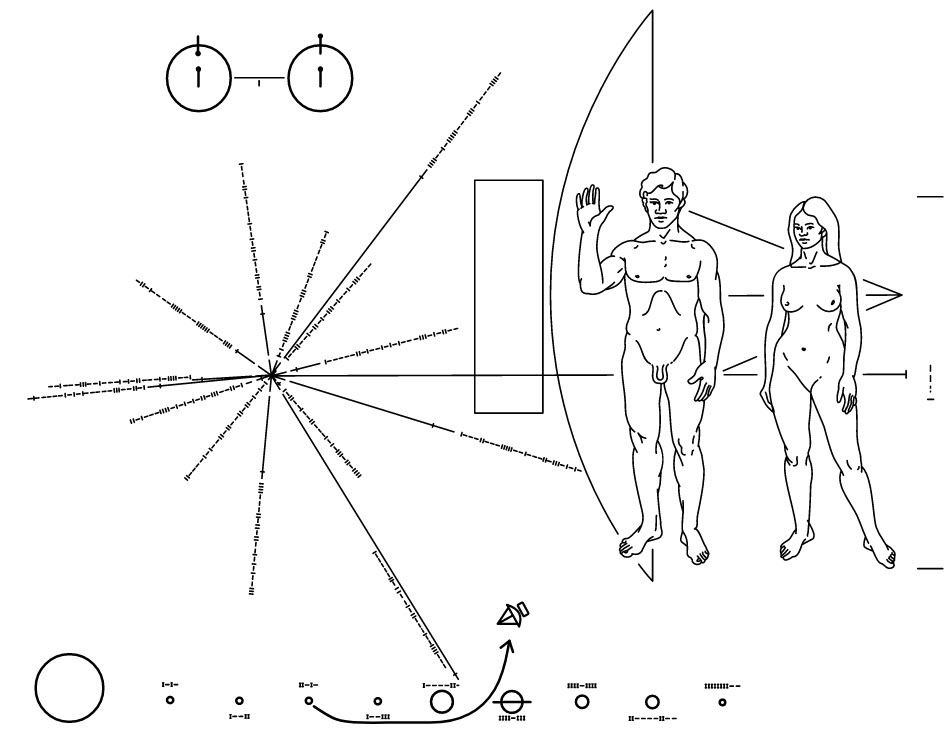

KM: Lessons Learned from Pioneer 10

Chris Holm, CKM | Director, Customer Success at Ellucian

km-lessons-learned-from-pioneer-10

July 10, 2017

Best Practices for Leading Change

Katy Saulpaugh | Enterprise Knowledge

best-practices-for-leading-change

May 4, 2017

Maximizing and Measuring User Adoption

Mary Little | Enterprise Knowledge LLC

maximizing-and-measuring-user-adoption

August 30, 2017

The Connection between Artificial Intelligence and Knowledge Management

Dr. Anthony J. Rhem | A.J. Rhem & Associates

the-connection-between-artificial-intelligence-and-knowledge-management

July 18, 2017

The Connection between Artificial Intelligence and Knowledge Management - Part 2

Dr. Anthony J. Rhem | A.J. Rhem & Associates

the-connection-between-artificial-intelligence-and-knowledge-management-part-2

July 31, 2017

The Connection between AI and KM - Part 3 - Cognitive Computing Technology

Anthony J. Rhem | AJ Rhem and Associates

the-connection-between-ai-and-km-part-3-cognitive-computing-technology

September 14, 2017

Video: KM Showcase 2019 Recap

Admin

video-km-showcase-2019-recap

April 26, 2019

KM ROI - A Look Inside an IT Company's KM Investment and Return

Dr. Randhir Pushpa, Guest Blogger and Partner of KMI

km-roi-a-look-inside-an-it-company-s-km-investment-and-return

November 3, 2020

Communication Techniques to Promote Adoption

Katy Saulpaugh | Enterprise Knowledge

communication-techniques-to-promote-adoption

September 27, 2017

Why People Fail to Share Knowledge

Zach Wahl | Enterprise Knowledge

why-people-fail-to-share-knowledge

October 11, 2017

Transforming an Employee Portal to a Digital Workspace

Craig St. Clair | Enterprise Knowledge

transforming-an-employee-portal-to-a-digital-workspace

November 29, 2017

Dancing With the Robots - the Rise of the Knowledge Curator

Rooven Pakkiri | Social KM Expert

dancing-with-the-robots-the-rise-of-the-knowledge-curator

January 31, 2018

Taking an Agile Approach to Adoption

Mary Little | Enterprise Knowledge LLC

taking-an-agile-approach-to-adoption

October 25, 2017

KMI Interviews with Recent CKM Students

Admin

kmi-interviews-with-recent-ckm-students

January 2, 2018

Information Architecture and Big Data Analytics

Dr. Anthony J. Rhem | A.J. Rhem & Associates

information-architecture-and-big-data-analytics

December 13, 2017

Measuring the Effectiveness of Your Knowledge Management Program

Anthony J. Rhem | AJ Rhem and Associates

measuring-the-effectiveness-of-your-knowledge-management-program

February 14, 2018

What is Knowledge Management and Why Is It Important?

Zach Wahl | President and CEO, Enterprise Knowledge

what-is-knowledge-management-and-why-is-it-important

April 3, 2018

Knowledge Management is about mindset and people - not technology

Rebecka Isaksson - Director, Knowledge Management Adoption at Microsoft

knowledge-management-is-about-mindset-and-people-not-technology

March 13, 2018

Can KM Be Your Superpower?

Vanessa DiMauro | Leader Networks

can-km-be-your-superpower

May 16, 2018

Conversational Leadership: 3 Steps to Improve Conversations

John Hovell | CEO and Co-Founder, STRATactical

conversational-leadership-3-steps-to-improve-conversations

April 16, 2018

Design Thinking and Taxonomy Design

Claire Brawdy | Principal, Enterprise Knowledge

design-thinking-and-taxonomy-design

May 2, 2018

Gaining Executive Buy-in for KM Initiatives

Mary Little, Senior Consultant, Enterprise Knowledge

gaining-executive-buy-in-for-km-initiatives

May 31, 2018

Why KM Efforts Fail

Zach Wahl | CEO, Enterprise Knowledge

why-km-efforts-fail

July 11, 2018

Shipbuilding, Sailing, Community and KM

Luis Ortiz-Echevarria, MPH

shipbuilding-sailing-community-and-km

October 3, 2018

Tips from a Veteran Knowledge Management Practitioner

Admin

tips-from-a-veteran-knowledge-management-practitioner

June 11, 2018

What We Learned from Running a KM "World Cup"

Ilana Botha | Moorhouse Consulting

what-we-learned-from-running-a-km-world-cup

August 13, 2018
